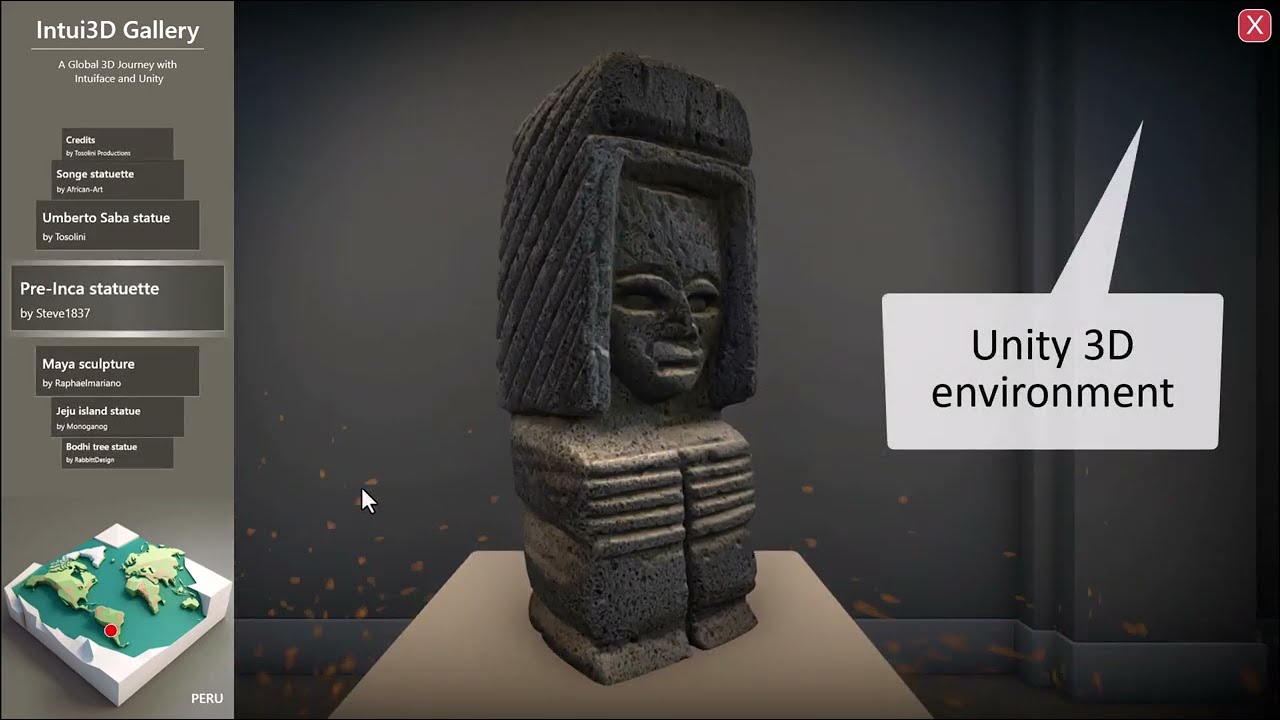

Welcome to the Intui3D Gallery, an exploration of the synergistic potential of Unity and Intuiface. This prototype showcases an integrated experience combining the interactive user interface of Intuiface with the dynamic 3D environment of Unity. With a simple selection from the Intuiface carousel, Unity directs the camera to the chosen artifact, offering flexible object rotation and viewpoint adjustment. Our gallery stands out with its global reach – each artifact is a 3D scan, created by individuals around the globe using the Polycam iOS app, showcasing a diverse array of cultural heritage in a single digital space.

How it Works:

Our prototype operates through two separate applications: Intuiface running in the foreground, and Unity running in the background. The Intuiface experience features a vertical asset flow sourced from an Excel database, which includes data such as artifact name, person who scanned it, location, and two URLs serving as remote actions.

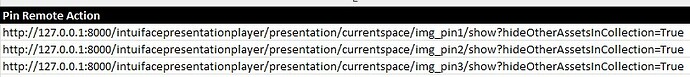

These URLs are integral to our prototype’s operation. The first, the Pin Remote Action, is a convenient trick that allows us to show geographic locations on a 3D world map using a dynamic command line created in Excel.

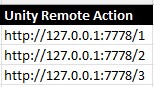

The second, the Unity Remote Action, sends a command to the Unity app running in the background.
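As a rough illustration of how two per-row URLs could be assembled from the spreadsheet data, here is a minimal Python sketch. The base URLs, ports, query parameter names, and the `lat`/`lon`/`id` columns are all assumptions made for the example. The post does not show the actual URL format or the Excel formula, so this is not the prototype's real scheme.

```python
from urllib.parse import urlencode

# Hypothetical endpoints: the real Pin and Unity remote action URLs are not shown in the post.
PIN_BASE = "http://localhost:9000/pin"
UNITY_BASE = "http://localhost:8080/focus"

def build_remote_actions(row):
    """Build the two remote action URLs for one spreadsheet row.

    `row` is a dict with assumed keys "id", "lat", and "lon".
    """
    # Pin action: place a marker on the world map at the scan location.
    pin_url = PIN_BASE + "?" + urlencode({"lat": row["lat"], "lon": row["lon"]})
    # Unity action: tell the background app which artifact to focus on.
    unity_url = UNITY_BASE + "?" + urlencode({"id": row["id"]})
    return pin_url, unity_url
```

In a spreadsheet, the same idea would usually be a formula that concatenates a fixed prefix with cell values, so each row carries its own ready-to-call URLs.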

Thanks to Intuiface’s transparent background support, users can interact with both Intuiface and Unity simultaneously, creating a smooth and immersive user experience.

The Unity app showcases a 3D gallery featuring artifacts captured using iPhone devices. Artifact rotation, viewpoint adjustment, smooth transitions, and lighting and particle effects are all handled within Unity. The challenge was to manage the 3D space from the Intuiface experience.

The integration between Unity and Intuiface is achieved by calling a URL from Intuiface and reading that URL in Unity using HttpListener. We assigned a unique URL to each specific action to ensure Unity executes the intended function based on the received URL. This allows us to synchronize Intuiface with Unity and perform various actions as the user scrolls through the asset flow.
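The one-URL-per-action pattern described above can be sketched in a few lines. The prototype itself does this inside Unity with `HttpListener`. The Python version below uses the standard library's `http.server` instead, purely to show the idea of listening for a request and dispatching on its path. The paths (`/focus`, `/reset`, `/quit`), the `id` parameter, and the port are hypothetical and not taken from the actual app.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlparse, parse_qs

# Hypothetical actions: stand-ins for camera moves and app control in the 3D scene.
def focus_artifact(params):
    artifact_id = params.get("id", ["unknown"])[0]
    return f"camera -> artifact {artifact_id}"

def reset_view(params):
    return "camera -> default view"

def quit_app(params):
    return "quit requested"

# One unique path per action, so the received URL alone selects the function.
ROUTES = {
    "/focus": focus_artifact,
    "/reset": reset_view,
    "/quit": quit_app,
}

def dispatch(url):
    """Map a request URL (or bare path) to an action; return (status, message)."""
    parsed = urlparse(url)
    handler = ROUTES.get(parsed.path)
    if handler is None:
        return 404, f"no action for {parsed.path}"
    return 200, handler(parse_qs(parsed.query))

class ActionHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Run the matching action and echo the result back to the caller.
        status, message = dispatch(self.path)
        body = message.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port=8080):
    """Listen on localhost only and block until interrupted."""
    HTTPServer(("127.0.0.1", port), ActionHandler).serve_forever()
```

Calling `serve()` starts the listener. Any client that can issue a GET request, such as a remote action triggered as the user scrolls, can then drive the actions. Keeping the routing in a plain lookup table means a new action only needs a new path and function.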

Try it Out:

We invite you to download and experience the Intui3D Gallery on your own PC.

- Download and unzip the Unity app (50 MB).

- Download and unzip the Intuiface XP (10 MB).

- Launch the Unity file, Museum.exe. Please note you might receive a security warning from the Windows firewall. Simply select “Allow.” The app will launch in full-screen mode.

- Run the Intuiface experience, scroll through the asset flow, rotate artifacts, and adjust your viewpoint.

- Tap the experience title to reset the gallery view and the “X” button to quit the Unity app.

Notable Points:

- This prototype builds on our previous Tosoville demo but is more efficient because it removes the need for middleware.

- Asking @Seb for confirmation, but I’m pretty sure this demo is compatible only with Player for Windows, not Player NextGen.

We’d love to hear from you: What potential use cases do you envision for this kind of integration?