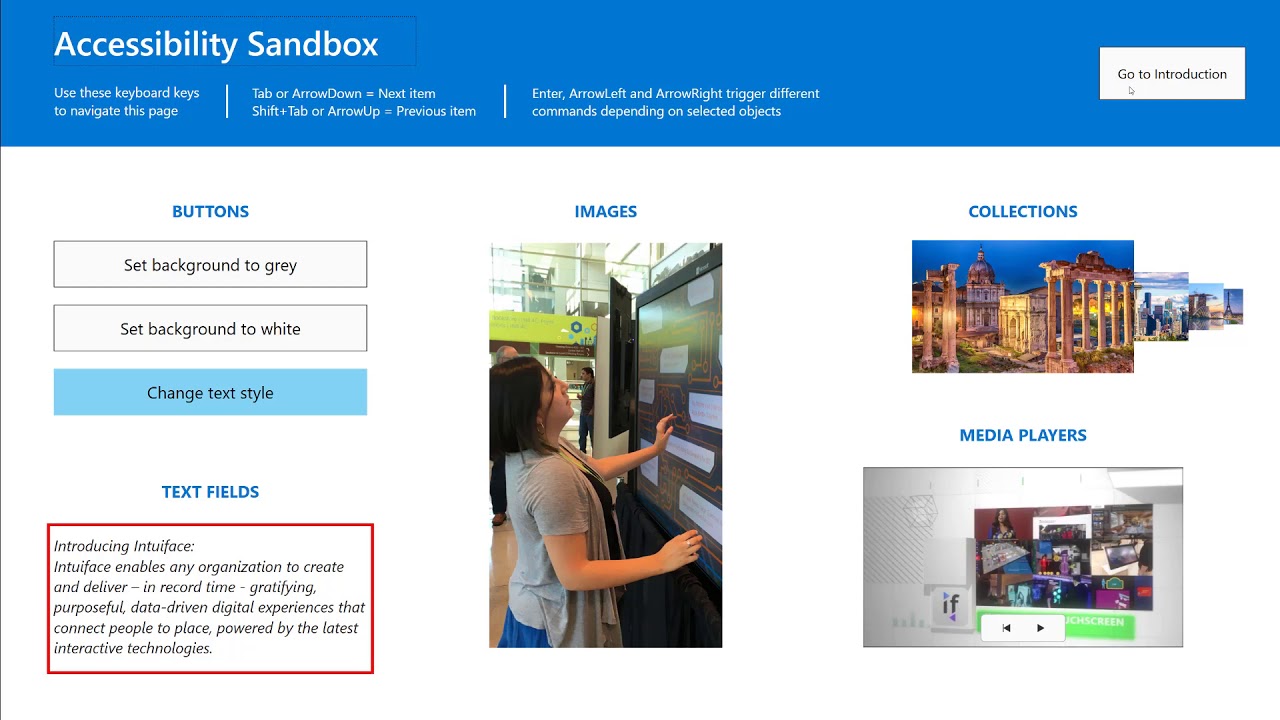

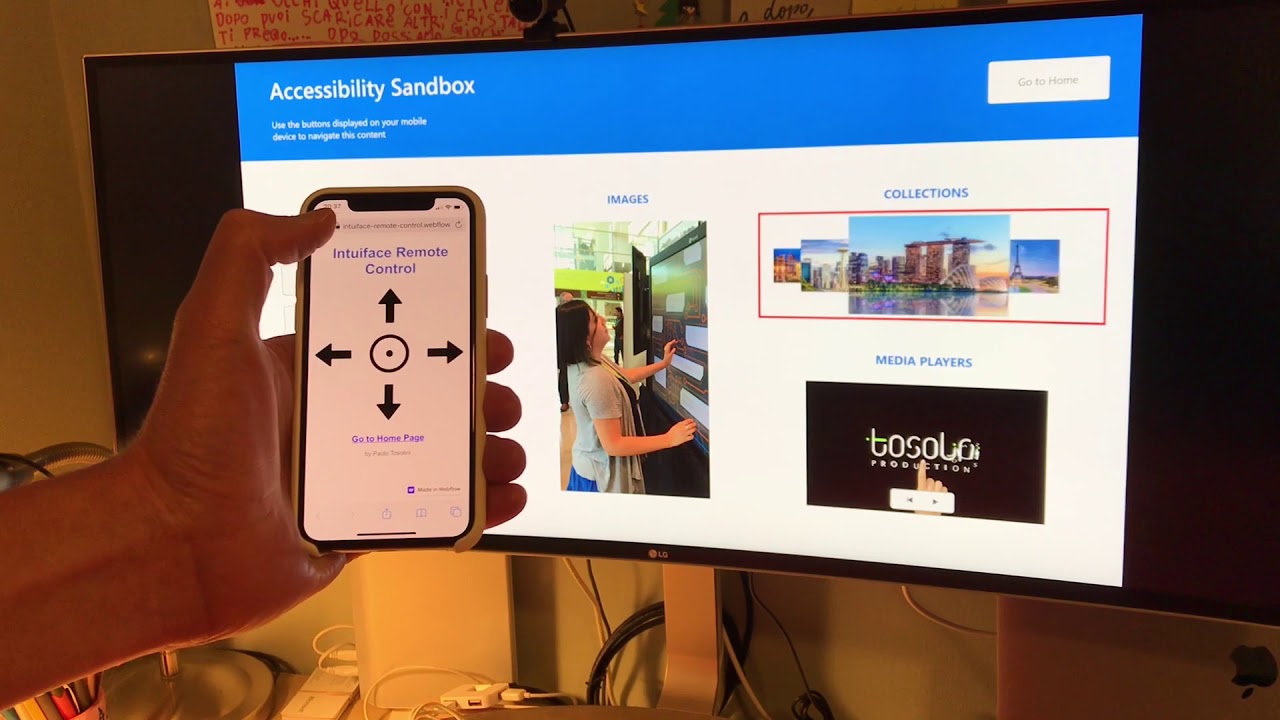

As outlined in this blog post by @geoff, an accessible interactive experience (XP) is an XP that works for as many people as possible. Intuiface offers several features for designing inclusive XPs: Text to Speech (TTS), Speech Recognition, Keyboard Events and Gestures on Assets.

This experiment was inspired by Microsoft's accessibility guidelines, as well as by the iOS accessibility implementation. In essence, a touch experience needs to either:

- Support at least one alternative input interface that does not require the same senses as the primary one; or

- Provide feedback through multiple sensory modes.

This demo showcases keyboard input, aural feedback via TTS and audio cues, and visual feedback for the element in focus, all of which coexist with standard touch and mouse interactions.
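The keyboard focus behaviour described above can be reduced to an ordered list of focusable elements and an index into it. The Python sketch below is illustrative only: `FocusRing`, the element names and the wrap-around rule are assumptions made for this example, not Intuiface features; in the XP itself the equivalent logic lives in triggers.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FocusRing:
    """Tracks which element has keyboard focus, in a fixed tab order.

    Hypothetical helper: names and wrap-around behaviour are assumptions.
    """
    order: List[str]
    index: int = 0

    @property
    def current(self) -> str:
        return self.order[self.index]

    def move(self, step: int) -> str:
        # Wrap around at both ends, so Tab on the last element returns to the first.
        self.index = (self.index + step) % len(self.order)
        return self.current

    def on_key(self, key: str, shift: bool = False) -> str:
        # Tab moves forward, Shift+Tab moves backward; other keys keep focus.
        if key == "Tab":
            return self.move(-1 if shift else 1)
        return self.current


ring = FocusRing(["Play button", "Volume slider", "Subtitles toggle"])
print(ring.on_key("Tab"))              # Volume slider
print(ring.on_key("Tab"))              # Subtitles toggle
print(ring.on_key("Tab"))              # Play button
print(ring.on_key("Tab", shift=True))  # Subtitles toggle
```

Each focus change is the natural moment to update the visual highlight and send a new string to TTS.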

Challenges and technical implementation

In building this demo, I encountered a few design challenges:

- Where to store the metadata that would be read aloud by the TTS Interface Asset (IA)

- How to read the changing status of certain assets (e.g. a toggle button that is checked) and communicate it back to the user via TTS

- How to have the same keyboard keys perform different tasks

- How to make keyboard events work seamlessly alongside mouse and touch input
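One way to approach the last two challenges is a lookup keyed on both the type of the focused element and the key pressed, plus a hook that moves keyboard focus whenever an element is touched or clicked. In this Python sketch, `KeyDispatcher`, `ACTIONS` and the element types are hypothetical names chosen for illustration, not the structure used in the XP.

```python
from typing import Dict, Optional

# Hypothetical action table: the same key maps to a different action
# depending on the type of the element in focus.
ACTIONS = {
    ("button", "Enter"): "press",
    ("toggle", "Enter"): "toggle",
    ("slider", "ArrowRight"): "increase",
    ("slider", "ArrowLeft"): "decrease",
}


class KeyDispatcher:
    def __init__(self, elements: Dict[str, str]):
        # Maps element name -> element type.
        self.elements = elements
        self.focused: Optional[str] = None

    def on_touch(self, name: str) -> None:
        # Touch or mouse selection also moves keyboard focus,
        # so both input methods stay in sync.
        self.focused = name

    def on_key(self, key: str) -> Optional[str]:
        # Returns the action to run, or None if the key means nothing here.
        if self.focused is None:
            return None
        kind = self.elements[self.focused]
        return ACTIONS.get((kind, key))


d = KeyDispatcher({"Play": "button", "Subtitles": "toggle", "Volume": "slider"})
d.on_touch("Volume")
print(d.on_key("ArrowRight"))  # increase
print(d.on_key("Enter"))       # None: Enter does nothing on a slider
```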

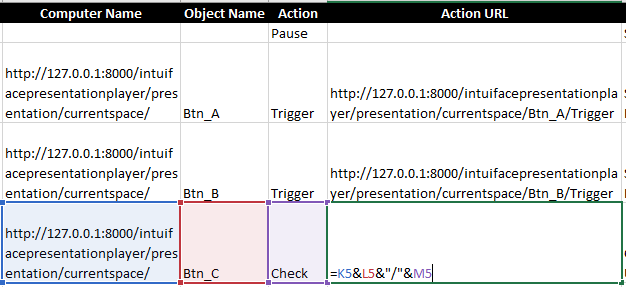

Ultimately, I settled on a solution that keeps most of the data in Excel, the core functionality in buttons placed off-screen on an experience layer, and, inevitably, some hard-coded conditional triggers at the scene level.
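Keeping the metadata in a spreadsheet means each focusable asset is just a row. As a rough illustration, the sketch below parses a CSV export of such a sheet with Python's standard `csv` module; the column names (`Name`, `Type`, `Label`, `Hint`) and `load_metadata` are assumptions, not the sheet layout used in this XP.

```python
import csv
import io
from typing import Dict

# Hypothetical export of the metadata sheet: one row per focusable asset.
SHEET = """Name,Type,Label,Hint
play,button,Play video,Press Enter to start playback
subtitles,toggle,Subtitles,Press Enter to switch subtitles on or off
volume,slider,Volume,Use the left and right arrows to adjust
"""


def load_metadata(text: str) -> Dict[str, Dict[str, str]]:
    """Index the accessibility metadata rows by asset name."""
    reader = csv.DictReader(io.StringIO(text))
    return {row["Name"]: row for row in reader}


meta = load_metadata(SHEET)
print(meta["volume"]["Hint"])  # Use the left and right arrows to adjust
```

With this layout, making another asset accessible means adding a row rather than writing a new trigger.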

To trigger object actions dynamically from Excel, I used formulas to generate remote commands that are invoked via the Call URL action. This action is usually used to control third-party apps, but it can also target the XP itself.
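Conceptually, such a formula concatenates an endpoint with the action and target stored in each row. The Python function below does the equivalent string building; `BASE_URL`, the `command` and `target` parameter names, and `remote_command` are placeholders, since the real address and parameters depend on how your experience is set up to receive remote commands.

```python
from urllib.parse import urlencode

# Placeholder endpoint: host, port, path and parameter names are assumptions.
BASE_URL = "http://localhost:8000/remote"


def remote_command(action: str, target: str) -> str:
    """Build the URL a Call URL action would request for one asset."""
    # urlencode escapes values, e.g. a space becomes '+'.
    query = urlencode({"command": action, "target": target})
    return f"{BASE_URL}?{query}"


print(remote_command("toggle", "subtitles"))
# http://localhost:8000/remote?command=toggle&target=subtitles
```

Escaping the values matters as soon as asset names contain spaces or other reserved characters.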

Along the same lines, the TTS string is built dynamically to tell the user the type of the object in focus and its status.
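A minimal sketch of that string assembly, assuming the label, a readable type name and an optional state are available for the focused asset. `speech_text` and its wording are hypothetical; the label, type, state order is one reasonable choice rather than a requirement.

```python
from typing import Optional


def speech_text(label: str, kind: str, checked: Optional[bool] = None,
                value: Optional[int] = None) -> str:
    """Compose the sentence handed to TTS for the focused asset."""
    parts = [label, kind]
    # Only mention a state when the asset actually has one.
    if checked is not None:
        parts.append("checked" if checked else "not checked")
    if value is not None:
        parts.append(f"{value} percent")
    return ", ".join(parts)


print(speech_text("Subtitles", "toggle button", checked=True))
# Subtitles, toggle button, checked
print(speech_text("Volume", "slider", value=40))
# Volume, slider, 40 percent
```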

This implementation is far from comprehensive, and I applied it to only a handful of Intuiface assets. That said, I hope the code and methods in this XP will save you some time in your own accessible Intuiface projects.

Special thanks to @Seb and the Intuiface product team for making these accessibility extensions available to all of us.