I recently acquired an Apple Vision Pro (AVP) and decided to explore its capabilities by creating an Intuiface spatial computing experience (XP) that allowed me to manipulate a 3D model in Mixed Reality (MR). Intriguingly, I performed the entire design process while wearing the headset. Let me share that journey with you.

Hardware Setup

My setup is an Apple MacBook Pro M3 running Parallels with a Windows 11 virtual machine, which is needed to run Windows applications such as Intuiface.

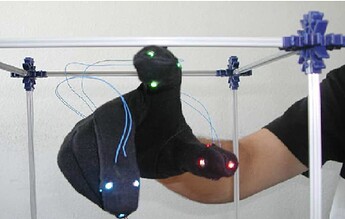

The AVP acts as a spatial computer that integrates digital content into your physical environment, allowing for interaction through eye movements, hand gestures, and voice commands. It can mirror the MacBook’s display onto a high-resolution virtual screen within the headset.

For this experiment, I wore the AVP throughout the entire creation process to fully immerse myself in an MR authoring environment.

The Intuiface XP

The proof of concept involved a simple XP that displayed a 360-degree photo within a 3D sphere. The photo was initially captured using a 360 camera. I processed the image with Polycam and exported it in USDZ format, which is optimized for 3D and augmented reality on Apple devices. Subsequently, I uploaded the file to my Azure cloud storage and linked the XP to the hosted 3D model using Intuiface’s OpenWindow IA (thanks @Seb!).
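For anyone who would rather script the upload step than use the Azure portal, here is a minimal sketch of what the request could look like. It is not how I did the upload; it assumes a SAS URL for the target blob and uses the Azure Blob Storage "Put Blob" REST operation. The account, container, file name, and SAS token are hypothetical placeholders. The one detail worth noting is the content type: Apple's guidance is to serve USDZ files as `model/vnd.usdz+zip` so that Safari recognizes them.

```python
import urllib.request

# Media type Apple recommends for serving USDZ files.
USDZ_CONTENT_TYPE = "model/vnd.usdz+zip"


def build_usdz_upload_request(sas_url: str, payload: bytes) -> urllib.request.Request:
    """Prepare (but do not send) a Put Blob request for a USDZ file.

    sas_url is assumed to be a full blob URL that already carries a
    SAS token with write permission.
    """
    headers = {
        # Required by the Put Blob operation.
        "x-ms-blob-type": "BlockBlob",
        # Stored with the blob and returned as Content-Type on download.
        "x-ms-blob-content-type": USDZ_CONTENT_TYPE,
    }
    return urllib.request.Request(sas_url, data=payload, headers=headers, method="PUT")


if __name__ == "__main__":
    # Hypothetical URL and placeholder bytes; replace with real values.
    url = "https://example.blob.core.windows.net/models/sphere.usdz?sv=PLACEHOLDER"
    request = build_usdz_upload_request(url, b"placeholder bytes")
    print(request.get_method(), request.full_url)
    # Passing the request to urllib.request.urlopen would perform the upload.
```

The sketch only builds the request so it can be inspected safely before anything is sent.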

No-Code Spatial Computing

Launching the XP in the AVP’s Safari browser was straightforward. Tapping the image downloaded the 3D sphere, which I could then manipulate directly with my hands in a truly immersive MR setting.
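No code was needed for this inside Intuiface. For comparison, on a plain web page the usual way to get the same tap-to-open behavior is Apple's AR Quick Look link convention: an anchor with `rel="ar"` whose first child is an image. The sketch below generates such a link. It assumes that Safari on the AVP treats these links the way Apple documents for Safari on iOS, and the function name and URLs are hypothetical.

```python
from html import escape


def ar_quick_look_link(model_url: str, thumbnail_url: str, alt_text: str) -> str:
    """Return an HTML anchor following the AR Quick Look link convention.

    The anchor carries rel="ar" and contains a single <img> child, which
    is the pattern Apple documents for USDZ links in Safari.
    """
    return (
        f'<a rel="ar" href="{escape(model_url)}">'
        f'<img src="{escape(thumbnail_url)}" alt="{escape(alt_text)}">'
        "</a>"
    )


if __name__ == "__main__":
    # Hypothetical URLs; replace with the hosted model and a preview image.
    print(
        ar_quick_look_link(
            "https://example.blob.core.windows.net/models/sphere.usdz",
            "https://example.blob.core.windows.net/models/sphere.png",
            "360-degree photo sphere",
        )
    )
```

All values are HTML-escaped, so a URL containing `&` or quotes still yields a valid attribute.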

Considerations

- The experience of authoring with the AVP on a large virtual display was revelatory. Within minutes, the headset’s presence faded from my awareness, allowing me to interact with my usual keyboard and mouse setup naturally. This capability is particularly advantageous for digital nomads who require a larger display while traveling.

- A limitation I encountered was the lack of parameter support in the OpenWindow IA, which necessitated additional steps to open the 3D sphere.

- Although spatial computing is still in its early stages, I was impressed by Intuiface’s capability to enable the creation of no-code applications, simplifying the process of building MR experiences.

What are your thoughts on this emerging platform? Are you considering exploring this technology?