I’m pleased to announce v2 of the Intuiface Accessibility Sandbox.

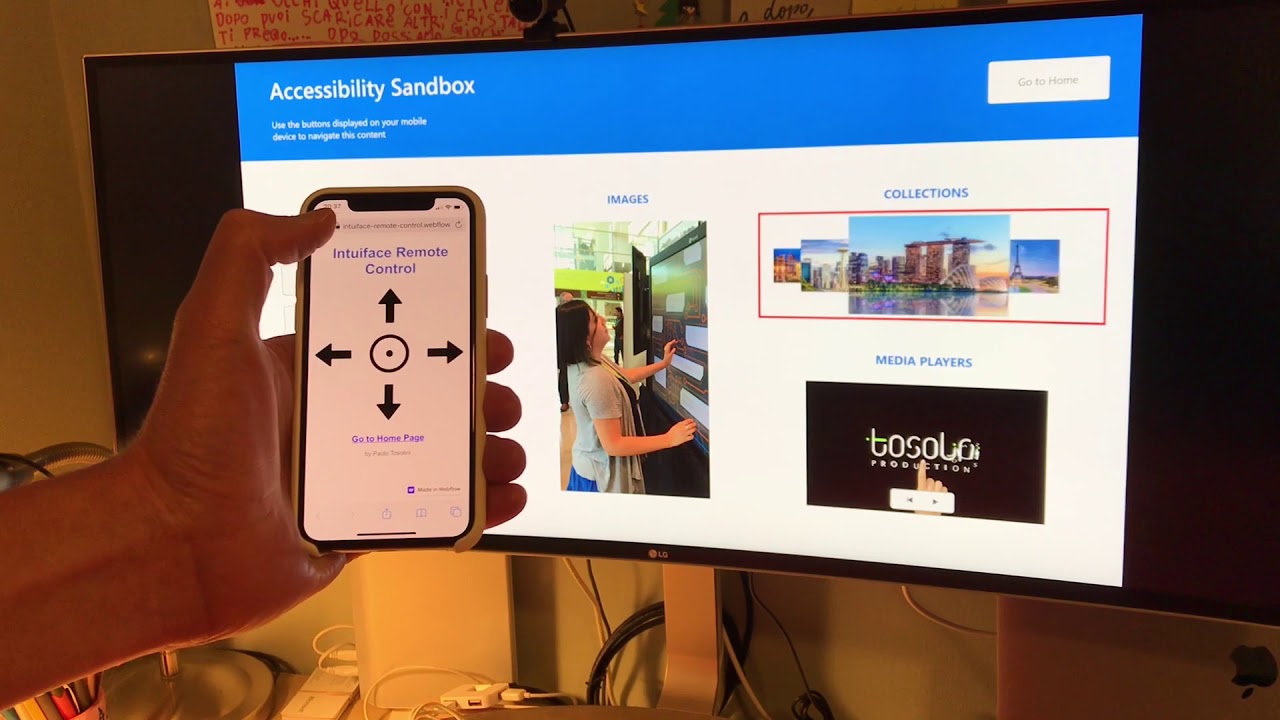

Inspired by @Seb's Multi-Modal Experience and the recent announcement of the new Reference Designs, I updated the code in my experimental framework to support touchless interactions.

This version allows:

- Sequentially moving focus across the interactive elements of a page using a keyboard, speech recognition, or a mobile remote control

- Visually highlighting objects in focus

- Reading aloud, via Text To Speech (TTS), the alternative text associated with objects in focus (e.g. button, image)

- Interacting with key assets (e.g. normal and toggle buttons, asset flows, and video players)
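
For readers who want to see how these features fit together, here is a minimal sketch of the general pattern in Python. It is not the Sandbox's actual code and does not use any Intuiface API: `FocusableItem`, `FocusRing`, `handle_command`, and the command words `next`, `previous`, and `select` are all hypothetical names chosen for illustration. The idea is that every input source (keyboard, speech, remote) is reduced to the same small set of commands, which move a focus index, update the highlight, and pass the alternative text to a TTS callback.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class FocusableItem:
    """Hypothetical stand-in for an interactive element on a page."""
    name: str
    alt_text: str                      # text handed to the TTS callback
    on_activate: Callable[[], None]    # what "select" does for this item
    highlighted: bool = False          # visual focus indicator


class FocusRing:
    """Moves focus sequentially through items, wrapping at both ends."""

    def __init__(self, items: List[FocusableItem], speak: Callable[[str], None]):
        if not items:
            raise ValueError("FocusRing needs at least one item")
        self._items = list(items)
        self._speak = speak            # assumed TTS hook; any callable taking a string
        self._index = -1               # -1 means nothing is focused yet

    @property
    def current(self) -> Optional[FocusableItem]:
        return None if self._index < 0 else self._items[self._index]

    def move(self, step: int) -> FocusableItem:
        """Shift focus by `step` (+1 or -1), highlight the item, and read its alt text."""
        if self._index >= 0:
            self._items[self._index].highlighted = False
            self._index = (self._index + step) % len(self._items)
        else:
            # First move: start at the first item going forward, the last going backward.
            self._index = 0 if step > 0 else len(self._items) - 1
        item = self._items[self._index]
        item.highlighted = True
        self._speak(item.alt_text)
        return item

    def activate(self) -> None:
        """Trigger the focused item's action, if any item is focused."""
        if self._index >= 0:
            self._items[self._index].on_activate()


# Every input source maps onto the same commands (hypothetical vocabulary).
STEPS = {"next": 1, "previous": -1}


def handle_command(ring: FocusRing, command: str) -> None:
    """Dispatch a command from keyboard, speech, or remote; unknown commands are ignored."""
    if command == "select":
        ring.activate()
    elif command in STEPS:
        ring.move(STEPS[command])


if __name__ == "__main__":
    demo_ring = FocusRing(
        [
            FocusableItem("play", "Play video", lambda: print("playing")),
            FocusableItem("mute", "Mute toggle", lambda: print("muted")),
        ],
        speak=lambda text: print(f"TTS: {text}"),
    )
    for word in ["next", "next", "select"]:
        handle_command(demo_ring, word)
```

Keeping the input handling separate from the focus logic means a new input method only needs to emit one of the existing commands.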

Feel free to use this code in your own projects.