This exploration was inspired by the Interactivity Beyond Touch post and by @Seb’s demo of the Web Triggers API. Let’s find out what it takes to remotely control an Intuiface XP using Siri voice commands, on both iPhone and Apple Watch.

iOS Shortcuts

Shortcuts in iOS let you automate sequences of actions so that you can perform them quickly with one tap on a shortcut icon or with a voice command issued to Siri. Android users should have an equivalent feature called Action Blocks.

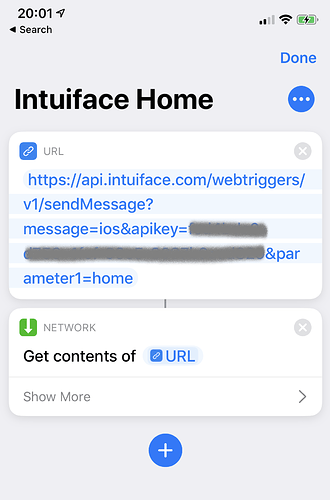

My prototype uses the Shortcuts app to call the Intuiface Web Triggers API and pass commands along to the target Intuiface XP. Here is what the shortcuts look like on my iPhone:

When I speak, Siri will recognize my voice and look for a matching shortcut with that name (in this case Intuiface Home). Once a match is found, the URL is triggered.

The Intuiface Knowledge Base explains in detail the steps to enable this functionality on your XP, which include creating a unique API key to use in your URL commands.

With your new key, the Intuiface Web Triggers API is ready to accept your calls. They will look something like this:

https://api.intuiface.com/webtriggers/v1/sendMessage?message=NAME_MESSAGE&apikey=YOUR_UNIQUE_API_KEY&parameter1=PARAMETER1

There are more parameters you can add to target specific Players / Experiences, but for simplicity’s sake, I’m not including them.
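To make the URL structure concrete, here is a minimal Python sketch that assembles a call from its parts. The helper name `build_trigger_url` is hypothetical, the key is a placeholder, and only the `message`, `apikey`, and `parameter1` query fields shown in the URL above are used. It builds the string only and does not send anything.

```python
from urllib.parse import urlencode

# Endpoint taken from the URL pattern shown above.
BASE_URL = "https://api.intuiface.com/webtriggers/v1/sendMessage"


def build_trigger_url(message, api_key, **parameters):
    """Assemble a Web Triggers URL (hypothetical helper, for illustration).

    Extra keyword arguments such as parameter1="home" are appended
    as additional query fields, in the order they are given.
    """
    query = {"message": message, "apikey": api_key}
    query.update(parameters)
    # urlencode escapes any characters that are not URL-safe.
    return f"{BASE_URL}?{urlencode(query)}"


# Placeholder values; replace them with your own message name and key.
url = build_trigger_url(
    "NAME_MESSAGE", "YOUR_UNIQUE_API_KEY", parameter1="PARAMETER1"
)
print(url)
```

Pasting the printed URL into a browser, or into the URL action of a shortcut, should be enough to fire the trigger, assuming the key is valid and the XP is listening.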

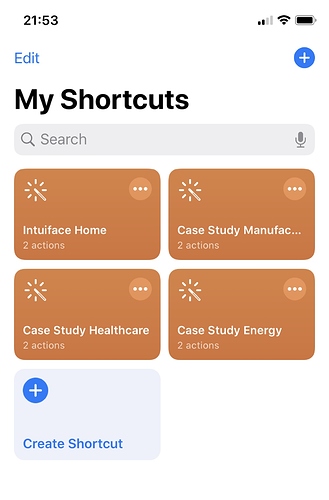

In my demo, I used ios as the message name, and home, manufacturing, healthcare, energy as parameters, to reflect the respective destinations on my XP.
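As a rough illustration, the four URLs for this setup could be generated as follows. This sketch assumes that each destination is passed as the value of `parameter1`, and that every shortcut is named "Intuiface" followed by the destination. Only "Intuiface Home" is confirmed above; the other three names are hypothetical, and the API key is a placeholder.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.intuiface.com/webtriggers/v1/sendMessage"
MESSAGE_NAME = "ios"  # message name used in the demo
DESTINATIONS = ["home", "manufacturing", "healthcare", "energy"]


def shortcut_urls(api_key):
    """Map each (assumed) shortcut name to the URL it should open."""
    urls = {}
    for destination in DESTINATIONS:
        query = urlencode({
            "message": MESSAGE_NAME,
            "apikey": api_key,
            "parameter1": destination,  # assumption: destination goes here
        })
        # Naming pattern is an assumption based on "Intuiface Home".
        urls[f"Intuiface {destination.capitalize()}"] = f"{BASE_URL}?{query}"
    return urls


if __name__ == "__main__":
    for name, url in shortcut_urls("YOUR_UNIQUE_API_KEY").items():
        print(f"{name}: {url}")
```

Each printed URL is what the matching shortcut would open when Siri recognizes its name.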

The next step is to create the iOS shortcuts that will trigger the proper URLs (see YouTube tutorials). This is what they look like on my iPhone. If you have an Apple Watch, they will sync to it automatically.

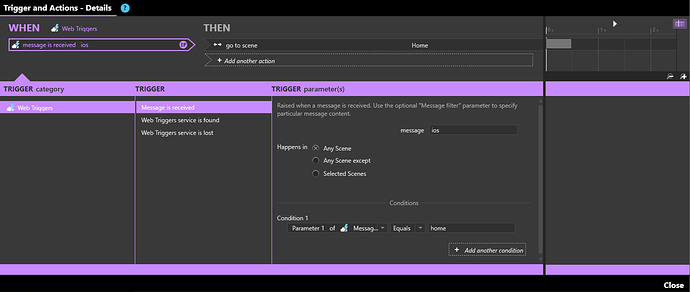

Finally, it’s time to enable your Intuiface XP to listen for Web Triggers. There is a dedicated interface asset (IA) for that, and here is how I programmed the Intuiface Home command.

And that’s it. Shortcuts can also be associated with events other than voice commands, such as reading RFID tags with your phone or detecting your geographical location. But that’s a topic for future experiments.