We’ve developed FlightVision Pro in collaboration with The Museum of Flight in Seattle. This web application visualizes 3D models of aviation artifacts, which were scanned using mobile devices. Key features:

- AI voice recognition interprets user requests

- 3D models of artifacts are displayed in the Apple Vision Pro headset

- The app is built on the Intuiface platform

- The artifacts are 3D scanned with the Polycam app

HOW IT’S DONE

We started by 3D scanning the artifacts using an iPhone 15 Pro and an app called Polycam. There are various techniques to capture a 3D model, and depending on the subject, you may choose photogrammetry or LiDAR. We then exported the models into USDZ format, which Apple operating systems natively visualize in AR mode.

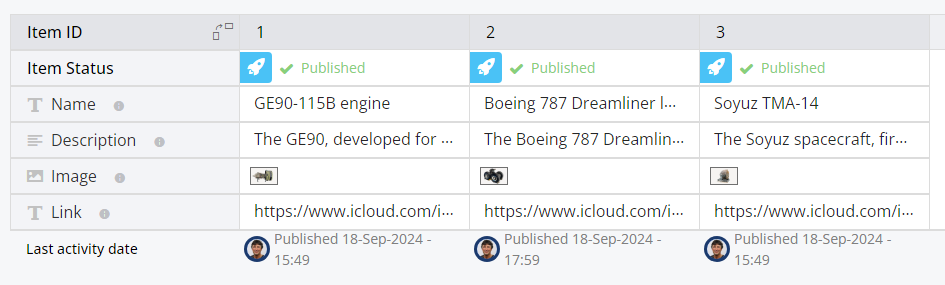

In Intuiface's Headless CMS (HCMS), we created the database structure for the artifacts, with four fields: Name, Description, Image, and an external link to the USDZ file hosted on iCloud.
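As a rough illustration of that structure, one record could be modeled as follows. This is only a sketch in Python: the class name, field names, and sample values are hypothetical, and the real schema lives in the HCMS.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Artifact:
    """One artifact record mirroring the four fields above (illustrative names)."""
    name: str
    description: str
    image: str      # path or URL of the preview image
    usdz_link: str  # external link to the hosted USDZ model


# Placeholder values, not the project's real data or URLs.
engine = Artifact(
    name="GE90-115B engine",
    description="High-thrust engine developed for the Boeing 777.",
    image="ge90.png",
    usdz_link="https://example.com/models/ge90.usdz",
)

# A plain dict is convenient when handing the record to a UI binding or JSON encoder.
record = asdict(engine)
```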

In Intuiface, we designed a simple UI that uses Whisper AI to transcribe the user’s voice input and ChatGPT AI to pass the transcribed request to OpenAI’s GPT-4 model with the following prompt:

This is a system prompt. You are an AI assistant for a museum application that matches voice commands to specific items in the museum’s database. Your task is to analyze the given voice command and determine which item from the database it most closely matches. You should return ONLY the ID of the best matching item, or 0 if there is no clear match.

Database items are in the format:

ID|Name|Description

Here are the database items:

1|GE90-115B engine|The GE90, developed for the Boeing 777, is known for its high thrust, fuel efficiency, and innovative composite fan blades, making it the exclusive engine for key 777 variants and setting a world record for thrust.

2|Boeing 787 Dreamliner landing gear|The Boeing 787 Dreamliner main and nose landing gears are designed, developed, produced and integrated by Safran Landing Systems and offer remarkable performance in terms of weight and strength.

3|Soyuz TMA-14|The Soyuz spacecraft, first launched in 1967, has been a key crew vehicle for Soviet and Russian space missions, including the ISS, and the TMA-14 variant carried the first expanded ISS crew in 2009, later being donated to The Museum of Flight.

Rules:

Analyze the voice command for key terms and concepts.

Compare these to the names and descriptions in the database.

Choose the most relevant match based on semantic similarity, not just keyword matching.

If there’s a clear best match, return only that item’s ID (a number).

If there’s no clear match, return only 0.

Do not explain your reasoning or provide any other text in your response.

Example voice commands:

“Show me the landing gear of the Boeing 787”

“What does the 777 engine look like”

“Show me the Soyuz spacecraft”

“Display information about the Space Shuttle”

Your response should be a single number (the ID) with no additional text or explanation. Just for this system prompt, respond OK.
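The database section of a prompt like this does not have to be typed by hand. Below is a minimal Python sketch, not the project's actual Intuiface setup, that renders records into the `ID|Name|Description` format. The function names and the shortened sample records are hypothetical.

```python
def to_prompt_line(item_id: int, name: str, description: str) -> str:
    """Render one record as 'ID|Name|Description' on a single line."""
    def clean(text: str) -> str:
        # Replace '|' and collapse whitespace/newlines so the delimiter stays unambiguous.
        return " ".join(text.replace("|", "/").split())
    return f"{item_id}|{clean(name)}|{clean(description)}"


def build_database_section(items: list[tuple[int, str, str]]) -> str:
    """Join all records into the block that follows 'Here are the database items:'."""
    return "\n".join(to_prompt_line(*item) for item in items)


# Shortened sample records for illustration only.
items = [
    (1, "GE90-115B engine", "Engine developed for the Boeing 777."),
    (3, "Soyuz TMA-14", "Crew vehicle that flew\nto the ISS in 2009."),
]
print(build_database_section(items))
```

Generating the lines from the same records the UI is bound to would keep the IDs in the prompt aligned with the IDs in the database.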

The prompt makes the model interpret the user request and return the ID of the best matching item, which is then displayed in a Swap collection bound to the HCMS. If there is no match, a random, AI-generated, cartoonish error message is displayed instead. Because we exported the Intuiface experience as a web app, we could run it on the Apple Vision Pro headset and take advantage of the device's advanced visualization capabilities.
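Even when told to answer with a number only, a model can occasionally return extra text, so it can help to validate the reply before using it. The sketch below is an assumption about how such handling could look in Python, not the logic used in the Intuiface experience. `parse_match_id` and the ID set are hypothetical.

```python
import re


def parse_match_id(reply: str, valid_ids: set[int]) -> int:
    """Return the matched item ID, or 0 when the reply is not a known ID."""
    # Accept only a bare integer, optionally padded with whitespace.
    match = re.fullmatch(r"\s*(\d+)\s*", reply)
    if match is None:
        return 0
    item_id = int(match.group(1))
    # Treat IDs that are not in the database the same as "no match".
    return item_id if item_id in valid_ids else 0


valid_ids = {1, 2, 3}
for reply in ["2", " 3\n", "Item 2", "7", "OK"]:
    print(repr(reply), "->", parse_match_id(reply, valid_ids))
```

A result of 0 would then take the same path as an explicit "no match" answer and show the error message.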

Special thanks to @pnelson, Maria Sanchez and @Seb for their contributions to this project.

Link to web app: FlightVision Pro (AR capability only available on iOS)