In this demo, I use RFID sensors to control 360-degree media. This mash-up combines four technologies:

- Nexmosphere sensors

- Intuiface

- AutoHotKey automation scripting language

- SeekBeak as the host of panoramic images

How it works

Nexmosphere manufactures devices and sensors that Intuiface supports through a dedicated interface asset (IA). I purchased one of their demo kits to learn how to use it. My goal was to demonstrate how to interact with immersive content by manipulating physical objects, without touching a screen.

The 360 content runs in a separate instance of Chrome, so I needed a way to control that browser from Intuiface. I used AutoHotKey (AHK) scripts to simulate pressing the left / right arrow keys in the browser.

AutoHotKey is an automation scripting language for Windows. Since its syntax has a learning curve, I used a third-party macro recorder by Pulover to capture the actions I needed and export them as AHK files. Here is the AHK script that simulates a left arrow key press in Chrome:

WinActivate, The Future of VR in a 360 Experience - SeekBeak - Google Chrome ahk_class Chrome_WidgetWin_1

Send, {Left}

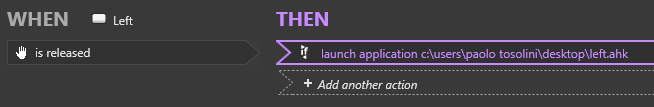
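For the right arrow, the script should only need a different key name. The version below is a minimal sketch rather than the exact file I used: it assumes AutoHotKey v1 syntax and the same Chrome window title as above, and the `WinWaitActive` check with its 2 second timeout is an optional safeguard I am adding for illustration, so the key press is not sent to the wrong window.

```autohotkey
; Hypothetical right-arrow counterpart of the script above (AutoHotKey v1 syntax).
; Assumes the Chrome window title is unchanged; adjust it to match your own page.
WinActivate, The Future of VR in a 360 Experience - SeekBeak - Google Chrome ahk_class Chrome_WidgetWin_1

; Wait up to 2 seconds for Chrome to become the active window.
WinWaitActive, The Future of VR in a 360 Experience - SeekBeak - Google Chrome ahk_class Chrome_WidgetWin_1, , 2
if ErrorLevel  ; set to 1 when the wait times out
    ExitApp

Send, {Right}
```

If the page title changes, the window title in both commands has to be updated to match, otherwise the script exits without sending anything.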

I downloaded the Nexmosphere demo created by @Seb and available on the Marketplace. I added some buttons that are triggered when the RFID sensors detect the left / right plastic disks. I linked the buttons to the AHK files on my desktop.

I set the Intuiface background to transparent and played a 360-degree infographic on SeekBeak in Chrome.

And it worked.