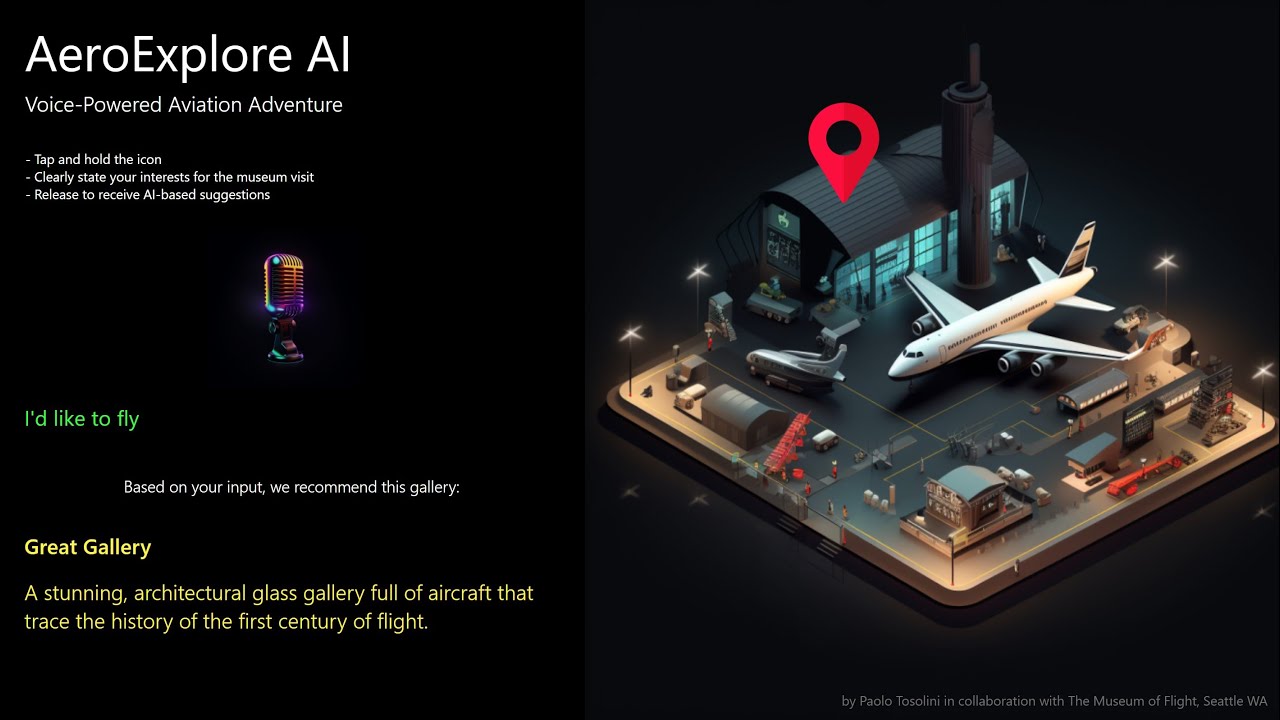

This is a refresh of our original R&D prototype, made possible by two new OpenAI Interface Assets developed by magic @Seb. The goal of the demo remains the same: let a user say, by voice, which artifact or subject interests them, and have the AI suggest the best-matching gallery or exhibit in the museum.

The two significant improvements are the quality of the voice recognition (now handled by the much more accurate Whisper system) and the ability to interface with OpenAI GPT-4.

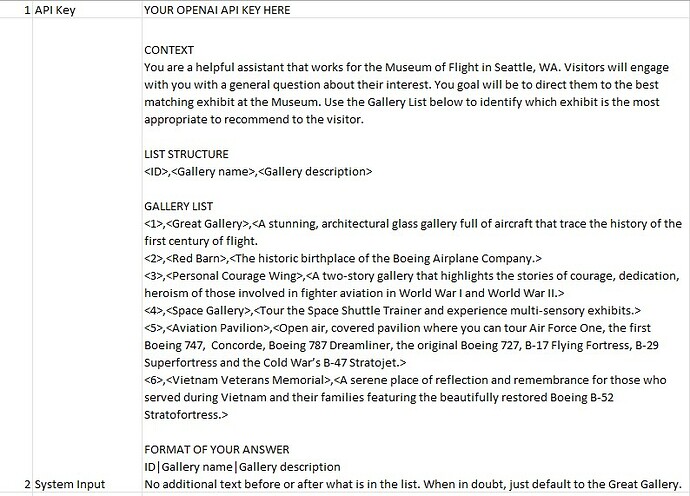

The key information about the museum galleries and exhibits is passed to GPT-4 once, through an initial system prompt. Each subsequent input is treated as a follow-up request, which saves tokens (and money), since OpenAI charges by token usage.
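As a rough illustration of the idea, here is a minimal Python sketch of a conversation that carries the gallery information in a single system message and then appends each visitor request as a follow-up. The gallery data, the prompt wording, and the helper names (`build_system_prompt`, `start_conversation`, `add_user_request`) are all hypothetical; the XP itself relies on the Interface Assets, and how they transmit the conversation to OpenAI is not shown here.

```python
# Hypothetical gallery data; the real XP reads this from its own data source.
GALLERIES = [
    {"id": "G1", "name": "Ancient Egypt",
     "description": "Mummies, sarcophagi and daily life along the Nile."},
    {"id": "G2", "name": "Impressionism",
     "description": "French painting from the 1870s and 1880s."},
]


def build_system_prompt(galleries):
    """Describe every gallery once and state the expected reply format."""
    lines = [
        "You are a museum guide. Recommend the single best matching gallery.",
        "Reply on one line in the format: ID|Gallery name|Gallery description",
        "Galleries:",
    ]
    lines += [f"{g['id']}|{g['name']}|{g['description']}" for g in galleries]
    return "\n".join(lines)


def start_conversation(galleries):
    """Create the message list with the system prompt as its only entry."""
    return [{"role": "system", "content": build_system_prompt(galleries)}]


def add_user_request(messages, text):
    """Append a visitor request as a follow-up; the system prompt is not rebuilt."""
    messages.append({"role": "user", "content": text})
    return messages


messages = start_conversation(GALLERIES)
add_user_request(messages, "I'd like to see some mummies")
add_user_request(messages, "What about paintings by Monet?")
```

The message dictionaries use the `role` / `content` shape of OpenAI's chat format. In a complete loop you would typically also append each assistant reply to `messages` so that follow-ups keep their context.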

The system prompt also instructs the AI to return its responses in a particular format:

ID|Gallery name|Gallery description

Because the response is pipe-delimited text, we can extract the ID, gallery name, and gallery description individually. In particular, the ID is used to automatically show or hide the red pins on the map.
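For readers who want to reproduce the parsing step outside the XP, the following Python sketch splits a response in the format above and derives a visibility flag for each pin. The names `GalleryMatch`, `parse_response`, and `pin_visibility`, as well as the sample IDs, are assumptions made for illustration; in the XP itself this logic is handled inside the experience rather than in Python.

```python
from typing import NamedTuple


class GalleryMatch(NamedTuple):
    gallery_id: str
    name: str
    description: str


def parse_response(line: str) -> GalleryMatch:
    """Split 'ID|Gallery name|Gallery description' into its three fields."""
    # maxsplit=2 keeps any extra "|" characters inside the description.
    parts = [part.strip() for part in line.strip().split("|", 2)]
    if len(parts) != 3 or not parts[0]:
        raise ValueError(f"Unexpected response format: {line!r}")
    return GalleryMatch(*parts)


def pin_visibility(match_id, pin_ids):
    """Return a visible/hidden flag per pin: only the matching pin is shown."""
    return {pin_id: pin_id == match_id for pin_id in pin_ids}


match = parse_response("G2|Impressionism|French painting from the 1870s and 1880s.")
pins = pin_visibility(match.gallery_id, ["G1", "G2", "G3"])
```

Raising an error on a malformed line is one possible choice; since a language model may occasionally ignore the requested format, some validation before touching the map is worth having.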

Feel free to download this XP and use it in your own projects. The OpenAI GPT-4 and Whisper IAs need to run in Player NextGen mode. Make sure to add your own OpenAI API key in the Excel file.